During the spring semester and into the summer of 2020, Studio X staff conducted eight half-hour interviews with faculty members currently working with immersive technologies to inform our fall 2020 pilot programming. We spoke with faculty in the following disciplines: engineering, history, digital media studies, and education. We view these initial conversations as ongoing, and we hope to expand beyond the limits of this small sample.

Q1: How have you engaged XR through your own research and/or in your classes and other student-centered work?

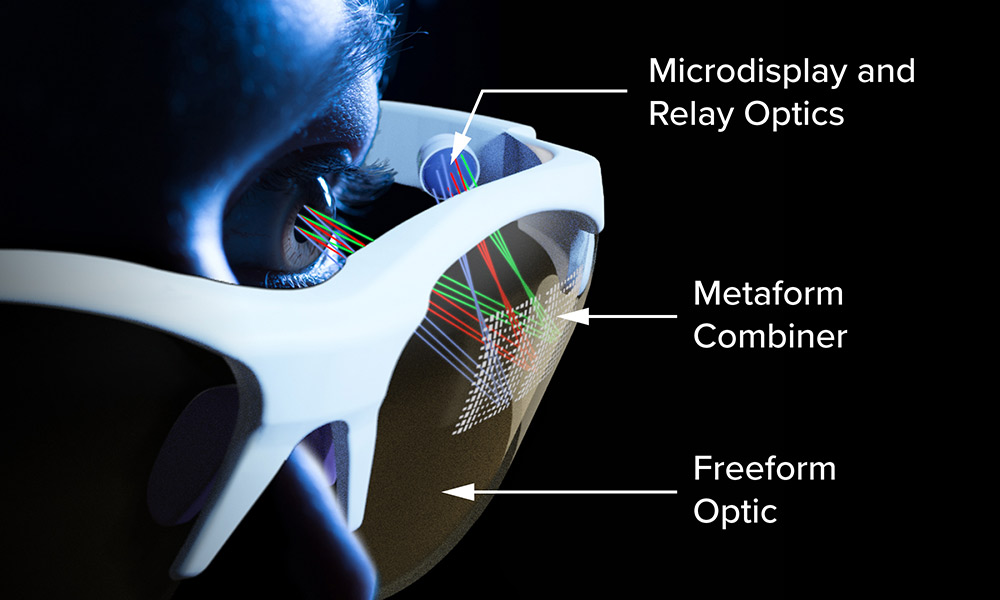

Faculty described their research projects as highly interdisciplinary, requiring diverse perspectives and expertise. Several faculty members said they were more interested in what XR can facilitate than in the tools and methods themselves, especially when it comes to teaching and learning. They want to address real-world problems and leverage XR for active learning opportunities. Faculty also discussed generating content for future research, such as assessments of tools that can guide future development.

Q2: What platforms and skills do your students require to participate in your courses and/or research that leverage XR?

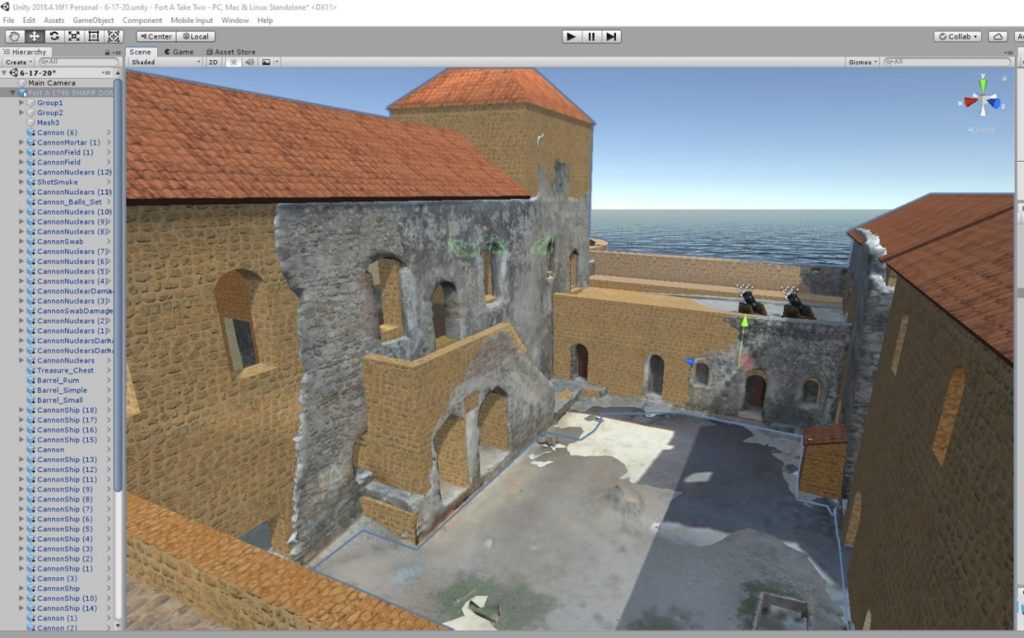

Nearly every faculty member mentioned Unity 3D and its steep learning curve.

Q3: How do students become involved with XR?

Q4: What does a community of practice look like for XR@UR?

Q5: Where do you find inspiration for new ways of teaching, innovative tools, or exciting projects?

Q6: Imagine you have enough funding to work on an XR project with a small group of students. What projects might you choose?

Half of the faculty members described expanding on current research projects, for example by generating more content for assessment or making projects more usable. Several faculty members also discussed creating specific XR experiences, such as AR walking tours centered on social justice topics, or virtual machine designs that would let users see how the machines operate from the inside.

Q7: What challenges do you encounter when engaging with XR?

Faculty discussed their frustrations with the steep learning curve of XR tools and with getting students acquainted with these tools early, so that they are prepared for more advanced coursework. Faculty find that they often must teach students the basics themselves or rely on their graduate student collaborators, who might have no other reason to learn the tool or method. Several participants emphasized the value of resident expertise and introductory, low-stakes trainings.

Access to enough of the same equipment in the same space is also a barrier. Faculty described struggling, when running experiments, to locate matching versions of VR and MR headsets, which are cost-prohibitive. Their research also often requires dedicated, long-term space, and setting up these unique environments can take hours of work before development can even begin. The technology also evolves rapidly, requiring users to relearn it constantly, not to mention maintain cross-platform compatibility and address storage issues.

XR also has a PR problem: most people do not understand its value or see themselves as users, let alone creators. One faculty member mentioned that XR seems overly complicated, unrelatable, and not something that everyone is ready to integrate into their courses. Faculty, staff, and students need to see more use cases to pique their interest, as well as have access to the costly equipment. Moreover, the long-standing debate between theory and practice endures. At a theory-driven institution such as UR, hands-on making and skill building remain a challenge.

Q8: Is there anything we should keep in mind?

Beginner Friendly

Provide introductory workshops and early onboarding opportunities for students

Facilitate Interdisciplinary Work

Support all disciplines & collapse departmental silos

Faculty Development

Create new opportunities, space, and time for faculty to experiment

Think Outside the Box

Push boundaries, take risks, & make challenging interventions. Studio X is a cross-unit initiative that can help to balance theory and practice.

Be the Hub for Immersive Technologies @UR

Stay up to date on XR news @UR and beyond, and share it out

Practical Advice

Host group events, classes, and similar activities