A Collaboration between Rossell Hope Robbins Library and Mary Ann Mavrinac Studio X

Travel back to medieval times and learn how to turn base metals into gold and unlock the power of the philosopher’s stone. Featuring authentic 17th-century texts from the Robbins Library’s collection—brought to life with stunning visuals and realistic simulations—Aurum (Latin for gold) will make you feel like a true alchemist as you mix potions and discover the secrets of this ancient craft.

Background

History of Alchemy

Alchemy has existed across various cultures and regions throughout history. Its goal is to understand the science of natural substances, their changes, and their transformations. While you might be picturing someone turning base metals into gold or creating some kind of healing potion, many alchemical texts are linked to spiritual and philosophical enlightenment.

Western alchemy originated in a close relationship between mysticism and metallurgy in ancient Egypt around 4,000 years ago. Everything we know of Egyptian alchemy comes from the writings of ancient Greek philosophers, most of which survived only in translations made in the Islamic world.

In China, alchemy was primarily connected to medicine. It took two forms, waidan (external alchemy) and neidan (internal alchemy). Waidan focused on creating elixirs of immortality and using minerals and metals to treat diseases, while neidan was used for spiritual transformation and the cultivation of the inner self.

In India, alchemy was known as rasāyana, which literally means “the path of the juice” or “the path of essence.” Rasāyana was associated with a system of medicine that used mercury as a core element of its operations, compounds, and medicines. Rasāyana aimed to heal people who were too sick to recover and to increase people’s life spans.

In the Islamic world, alchemy (ilm al-kimiya) flourished during the Islamic Golden Age. Heavily influenced by Greek and Egyptian alchemy (most of what we know of earlier traditions is through Islamic translations and commentaries), Islamic alchemists were responsible for major discoveries that furthered alchemy and other sciences, including hydrochloric, sulfuric, and nitric acids, potash, soda, and the technique of distillation. They also made significant advancements in the fields of chemistry, pharmacology, and metallurgy.

The alchemy that developed in Europe during the Middle Ages was heavily indebted to the Islamic tradition, through which European alchemists encountered the works of ancient Greek and Egyptian alchemy. Alchemy was deeply tied to religion and philosophy, and many influential alchemists were also priests, monks, or friars. By the end of the Middle Ages, however, alchemy was more associated with occult practices. In the 16th and 17th centuries, alchemy was studied alongside magic, medicine, and what we now think of as modern science, particularly chemistry. Many scientists, such as Robert Boyle, Isaac Newton, and Tycho Brahe, were also alchemists. While the pursuit of transmutation and the creation of an elixir of life were eventually abandoned, alchemy played a significant role in the development of modern science.

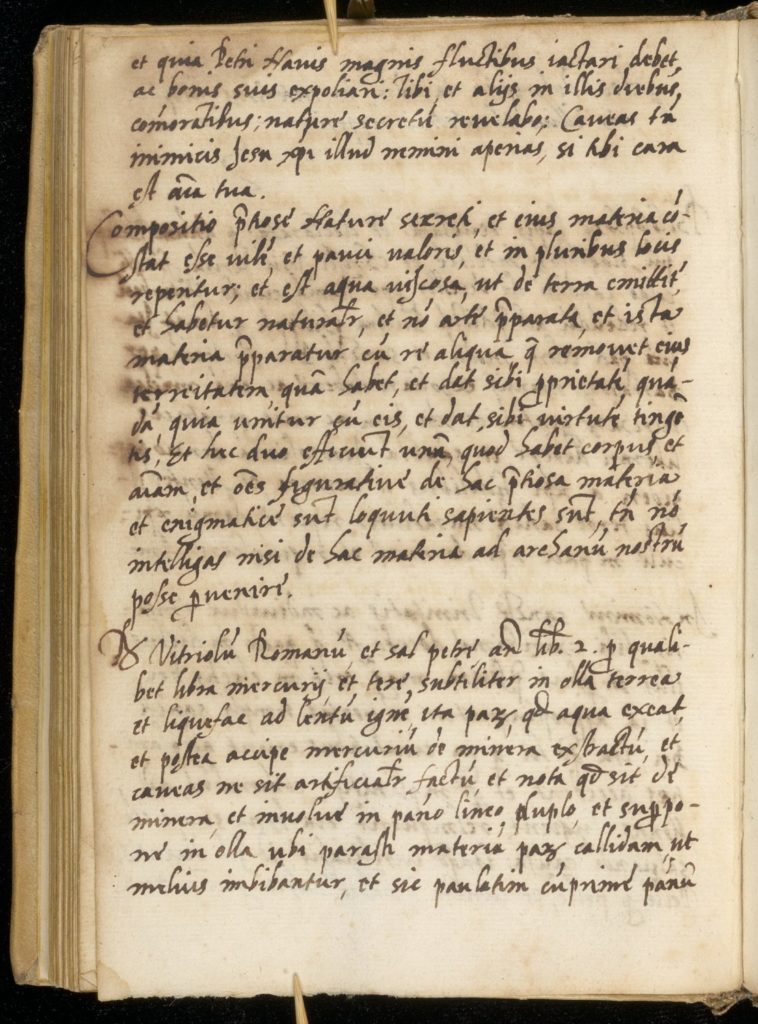

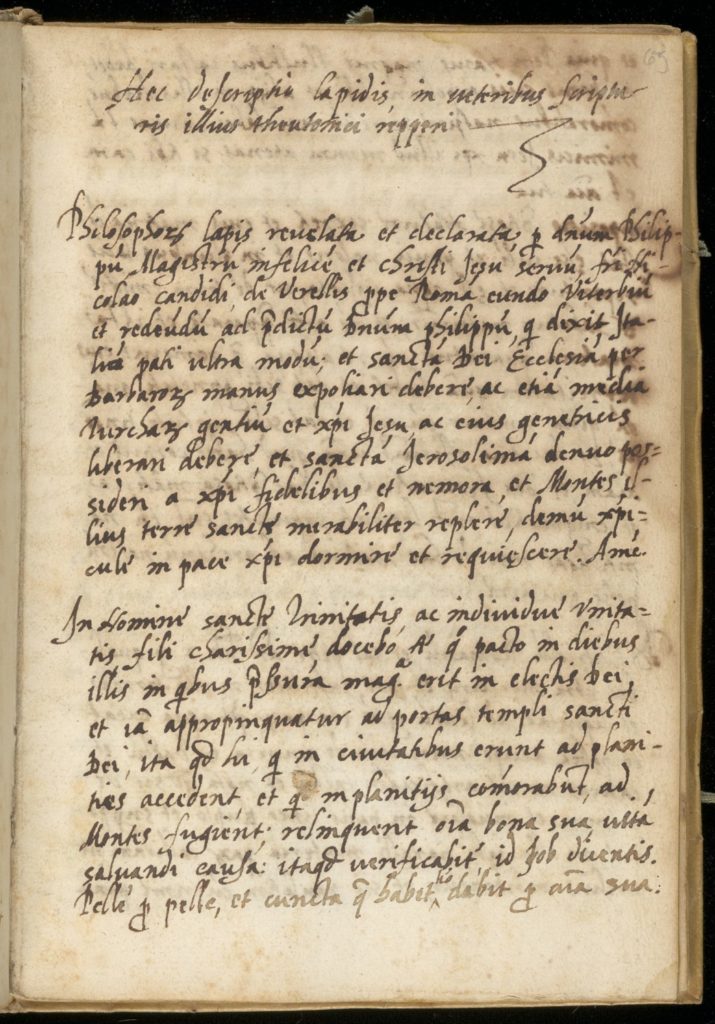

Robbins Library Manuscript

This manuscript is a personal collection of alchemical texts from 17th-century Italy, containing more than a dozen recipes, many of which involve the creation of the philosopher’s stone.

Our research team has transcribed and translated the texts from the manuscript, which were originally written in both Latin and Italian. These texts include some of the most popular alchemical treatises from the late medieval and early modern periods.

While the original owner of the manuscript remains unknown, it’s highly likely that the owner was an alchemist who gathered materials from their own experiments and from those of their colleagues.

Through Aurum VR, you can step into the shoes of an alchemist and explore the recipes found in the alchemical miscellany manuscript, gradually unraveling the secrets of the ancient craft.

Contributors

Management Team

Modeling Team

Coding Team

Story Team

Past Contributors

Mission

We aim to expand awareness of and access to our library’s diverse collections through transformative and innovative methods.

PROJECT GOALS

- Reimagine our library’s collections. Imagine if you were able to interact with a text in which you could tap on a word to define it, engage with complex visualizations that illustrate concepts, and recreate the recipes from a first-person perspective.

- De-westernize the perception of alchemy and showcase its rich global history and relevance to a contemporary audience.

- Transform teaching and learning. Instead of reading about alchemy and imagining the laboratories, tools, recipes, and experiments, what if you could carry out the experiments yourself within a medieval laboratory setting? How would mixing the ingredients and handling the tools impact your understanding of this history?

- Embrace interdisciplinary collaboration. This project brings together history, science, art, and technology to create something remarkable.

- Foster hands-on, skill-based, project-based learning opportunities. Students learn XR software and hardware, collaborate in teams, and conduct research in a meaningful way.

Values

- We are committed to showcasing the diverse and global history of alchemical practices and promoting a more inclusive and expansive view of history.

- We strive to make our VR alchemist laboratory experience accessible to everyone, regardless of background or ability, and are dedicated to creating a comfortable and enriching environment for all.

- We seek to engage participants in an immersive and interactive learning experience, drawing on the rich resources of the Robbins Library.

- We aim to leverage cutting-edge technology and modern design practices to bring the ancient art of alchemy to life in a new and exciting way.

- We value the contributions of multiple stakeholders, including the Robbins Library and user feedback, to create a rich and authentic experience.

- The project is dedicated to presenting a nuanced and global representation of alchemy, drawing on historical research and expert knowledge to ensure that the experience is based in diverse historical practices while taking creative and magical liberties to enrich storytelling.

About the project

Story

You are Kiana, a career alchemist in a small town. By day, you run a sufficiently successful business fulfilling the townsfolk’s more mundane orders—dyes and rat poisons—but you can’t help wondering if, with enough practice, you might be able to uncover the secret of the famed philosopher’s stone in the score of alchemical texts your father left to you.

Adventures

- Explore two floors of the alchemist’s tower and the equipment available to you on your journey to master the craft.

- Follow the step-by-step recipe to create a purple dye potion.

- Experiment with heating and mixing potions available in your lab.

Access Features

TELEPORT

- A locomotion technique which provides users with instantaneous transport from one location to another without having to move physically

- Reduces the risk of inducing motion sickness, a common issue for VR applications

- Provides an additional layer of comfort for players who may feel disoriented or overwhelmed in large virtual spaces

- Creates navigation options for wheelchair users or those who may have difficulty with precise or rapid movements, such as those with fine motor skill impairments

SNAP TURN

- Allows users to change their viewpoint without physically turning their body. This can be helpful for wheelchair users, those with mobility aids, or those who may have difficulty with sustained physical movements, such as people with chronic pain or fatigue

- Reduces the risk of motion sickness for users who experience discomfort when turning in VR

HEIGHT ADJUSTMENT

- Allows users to adjust the environment to their height, including seated modes

FORCE GRAB

- Allows users to teleport an object to their location to “grab” it. This can be helpful for users who may have difficulty with fine motor control or precise movements

- Reduces the risk of motion sickness or discomfort some users experience when using traditional grab methods in VR games

SETTINGS MENU

- Provides users with an easy way to adjust game settings and access features at any point in the game, such as adjusting the height, choosing a skin color, and choosing between smooth and snap turns

External Resources Used

HurricaneVR – Physics-based interaction toolkit package for Unity development

Timeline

Fall 2021

- Collaborative work between Studio X and the Robbins Library to create a shared vision for the project

- Digitization of the Alchemical miscellany manuscript by Lisa Wright in the Digital Scholarship Digitization Lab

- Research conducted on alchemical practices and notable alchemical achievements

- Contextual documentation and project management systems and tools established

- World building activities, such as a Dungeons & Dragons night

Spring 2022

- Training on project tools (e.g., Unity and Blender)

- Readings and discussion on a variety of topics, including VR and accessibility, nonwestern histories of alchemy, and gaming and gender

- World building activities, such as sketching, creative writing, and discussion

- 3D models of alchemical equipment, with materials, created by each student in Blender and imported into Unity

- Scene creation in Unity, with locomotion and grabbable objects implemented

- Implementation of access features and settings menu in Unity

Fall 2022

- Sub-teams (3D modeling, coding, and story) established

- Heating and glass breaking features developed

- Experimentation with custom shaders

- Showcase of the project at Rochester Institute of Technology’s annual Frameless XR Symposium

Spring 2023

- Sub-team leads established

- Animation of selected models

- Liquid simulation implementation

- Full step-by-step recipe implementation

- 3D modeling and texturing for alchemical ingredients

- Translation of 5 recipes from Robbins Library manuscripts

- Design and implementation of user interface and welcome screen in Unity

- Research and planning on VR accessibility for neurodivergent users

Vision

The current project offers an immersive and engaging introduction to the fascinating world of alchemy. However, we have ambitious plans to expand and enhance the experience even further in the coming semesters.

- Integrate nine more alchemical recipes, including the creation of gold, pearls, and the elusive philosopher’s stone. These additions will provide users with an even more comprehensive understanding of the intricacies of alchemy and allow them to experiment with a wider range of materials and techniques.

- Expand the overarching story of the game and weave in more historical context.

- Add textured 3D models of recipe ingredients, equipment, and room décor, making the experience even more immersive and engaging for users.

- Conduct user testing, including collaborating with the Office of Disabilities to ensure accessibility for all users.

Through these enhancements, we aim to create a comprehensive and inclusive experience. We are excited to embark on the next phase of Aurum VR and look forward to sharing our progress with you.

Final Notes

We will provide updates as we continue to expand and refine our immersive experience, but don’t hesitate to reach out if you have any feedback or suggestions! If you’re eager to try the experience for yourself, come visit us at Studio X on the first floor of Carlson Library, where our team will be happy to guide you through the alchemical adventure of a lifetime.