There is a strong emphasis on fostering cross-disciplinary collaboration in extended reality (XR) at Studio X. Over 50 researchers across the UR use XR technology for their research and teaching, and many come to Studio X for consultation and advice on either program development or engineering. As an XR Specialist at Studio X, I had the opportunity to work on two XR-related research projects this past summer, one in collaboration with the Brain and Cognitive Science Department (BCS) and the other with the Computer Science Department (CS). These projects were supported through the Office of Undergraduate Research by a Discover Grant, which supports immersive, full-time summer research experiences for undergraduate students at the UR.

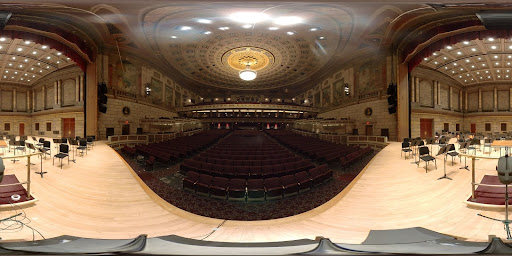

The research with BCS involves digitizing Kodak Hall at the Eastman School of Music and bringing it into VR. The result will provide a more realistic environment for user testing, to better study how humans combine and process light and sound. The visit to Kodak Hall was scheduled back in March. Preparation before the visit included working out the power supply and cable management, stage arrangement, clearance, and more. We also discussed which techniques to use to scan and capture the hall. Three object scanning techniques were tested before and during the visit: photogrammetry, 360-image, and time-of-flight (ToF).

Photogrammetry creates 3D models of physical objects by processing photographic images or video recordings. When images of an object are taken from many different angles and processed with software like Agisoft Metashape, the algorithm locates and matches key points across the images and combines them into a 3D model. I first learned about this technique at a photogrammetry workshop at Studio X led by Professor Michael Jarvis. It has been very helpful for the research because it captured fine detail on the mural in Kodak Hall, which the other techniques failed to do.

360-image, as its name suggests, is a 360-degree panoramic image taken from a fixed location. With the Insta360 camera borrowed from Studio X, a capture session requires almost no setup, and the results can be quickly previewed using the app on a phone or other smart device.

The Time-of-Flight (ToF) technique emits light and measures the time it takes for the reflection to travel back, from which it calculates depth. Hardware using the ToF technique can be found on modern devices, such as recent iPhone and iPad Pro models with a LiDAR scanner. I tested the ToF scanner on the iPad Pro at Studio X. It provides a great sense of spatial orientation and has a fairly short processing time.
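The depth calculation behind ToF comes down to one formula: the light covers the distance twice (out and back), so depth is the speed of light times the round-trip time, divided by two. Below is a minimal Python sketch of that arithmetic. It assumes an idealized, noise-free measurement, and the function names are illustrative only; they are not taken from any device API or from the scanner used in this project.

```python
# Idealized time-of-flight arithmetic (illustrative names, not a device API).

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum


def tof_depth_m(round_trip_s: float) -> float:
    """Return the distance to a surface given the light's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light travels to the surface and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


def round_trip_time_s(depth_m: float) -> float:
    """Return the round-trip time expected for a surface at the given depth."""
    if depth_m < 0:
        raise ValueError("depth cannot be negative")
    return 2 * depth_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A reflection that returns after 20 nanoseconds is roughly 3 m away.
    print(f"{tof_depth_m(20e-9):.3f} m")
```

The numbers show why ToF hardware needs very precise timing: a surface 3 m away returns light in about 20 nanoseconds.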

To get a scan with higher accuracy and resolution, we used the Faro Laser Scanner. Each scan took 20 minutes, and we conducted 8 scans to cover the entire hall. The result is a 20+ GB model with billions of points. To load the scene onto the Meta Quest 2 VR headset, we dramatically reduced the size and resolution of the model using tools such as gradual selection, adjusting the Poisson distribution, material paint, etc. We also deleted excess points and replaced flat surfaces, such as the stage and mural, with higher-quality images. The end result is a good-looking model with decent detail at around 250 MB, small enough for the headset to run.
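To give a sense of how a point cloud can be thinned out, here is a minimal Python sketch of voxel-grid downsampling. This is a generic technique swapped in for illustration, not the workflow used in this project (which relied on the tools named above): space is divided into cubic cells, and all points in a cell are replaced by their average. The function name and the `voxel_size` parameter are assumptions made for this example.

```python
# Voxel-grid downsampling sketch (generic technique, not the project's workflow).
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic cell with their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Group points by the integer index of the cell that contains them.
    cells = defaultdict(list)
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        cells[key].append((x, y, z))

    # Emit one averaged point per occupied cell.
    reduced = []
    for members in cells.values():
        n = len(members)
        reduced.append(
            (
                sum(p[0] for p in members) / n,
                sum(p[1] for p in members) / n,
                sum(p[2] for p in members) / n,
            )
        )
    return reduced


if __name__ == "__main__":
    cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (1.0, 1.0, 1.0)]
    # With 10 cm cells, the two nearby points merge and the far one is kept.
    print(len(voxel_downsample(cloud, voxel_size=0.1)))
```

A larger `voxel_size` merges more points and gives a smaller but coarser model, which is the same trade-off between file size and detail described above.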

The model was handed over as a Unity package to Shui’er Han from BCS, who is going to implement the audio recording and spatial visualization before conducting the user testing. It is amazing to see so many people bringing together their experience and knowledge to make this cross-disciplinary project a reality. I would like to thank Dr. Duje Tadin, Shui’er Han, Professor Michael Jarvis, Dr. Emily Sherwood, Blair Tinker, Lisa Wright, Meaghan Moody, and many more for giving me the amazing opportunity to work on this fun research and for all the help they provided along the way. I can’t wait to see what they achieve beyond this model and research project.

You can read more about this cross-disciplinary collaboration here.