By: Tamuda Chimhanda

Introduction:

My name is Tamuda, and I am currently a computer science student at the University of Rochester. As a proud alumnus of the African Leadership Academy, an esteemed institution in Johannesburg dedicated to cultivating the next generation of African leaders, I found special significance in my recent trip to Nairobi, Kenya, for the Networks Conference. The conference, organized by my alma mater from November 21 to 23, was not just a professional engagement but also a heartfelt homecoming.

Representing Studio X, I was there to explore and discuss the burgeoning potential of XR in healthcare across Africa. The event brought together over a hundred participants from various parts of the continent, working in sectors as diverse as education, health, infrastructure, and agriculture. The attendees included teachers, students, consultants, and medical practitioners. Most were new to virtual reality, which made the event a unique opportunity to showcase the innovative work we’re doing at Studio X.

For me, attending this conference was more than just a professional commitment; it was a journey back to my roots. The African Leadership Academy nurtured me. Now, as a specialist in XR technology, returning there to talk about healthcare opportunities for African youth felt like coming full circle. It was an opportunity to give back to the community that played a pivotal role in shaping my aspirations and values. Sharing knowledge about XR in healthcare and witnessing the impact of this technology on my fellow Africans was not only a professional milestone but also a deeply personal and fulfilling experience.

Key Discussion Points:

Training and Surgical Specialization:

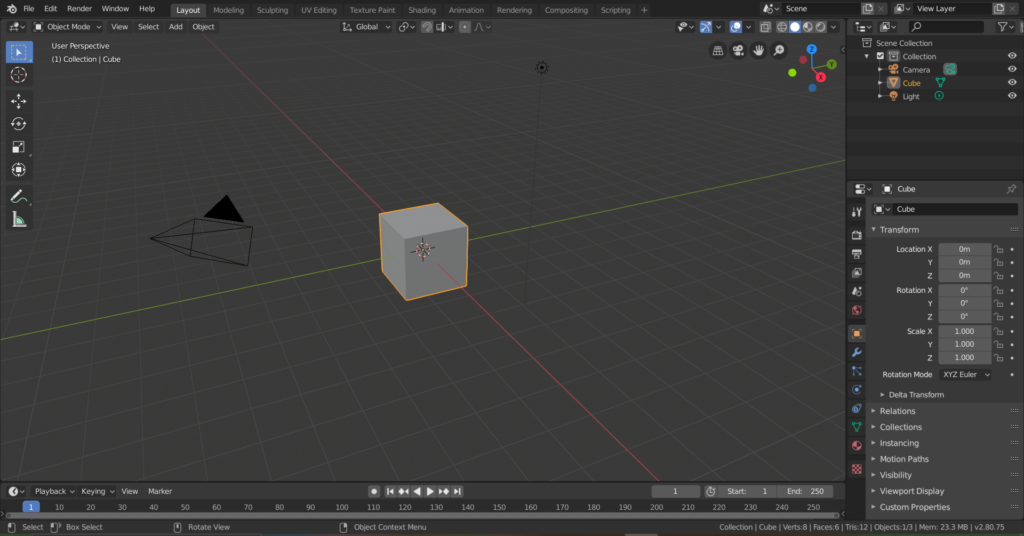

Traditionally, medical training involved learning with cadavers and observing experienced surgeons. However, specialization demands extensive, repeated practice, which is time-consuming and expensive. VR offers a viable alternative with its ability to create hyper-realistic, repeatable training scenarios.

Features of XR in Healthcare:

- Hyper-realistic Simulations: XR provides an immersive environment that closely mimics real-life scenarios.

- Remote Learning: It enables trainees to learn from and collaborate with experts around the world, such as professionals in India, without geographical barriers.

- Mistake-Friendly Environments: XR allows trainees to make mistakes without real-world consequences, fostering a better learning experience.

- Cost-Effectiveness: Over time, XR reduces the costs associated with traditional training methods. For example, a cadaver can only be used once, whereas a simulated procedure can be repeated as often as needed.

- Objective Performance Measurement: XR offers tools to accurately assess a trainee’s performance and progress before they are legally allowed to perform surgery on an actual patient.

Experience at the Event:

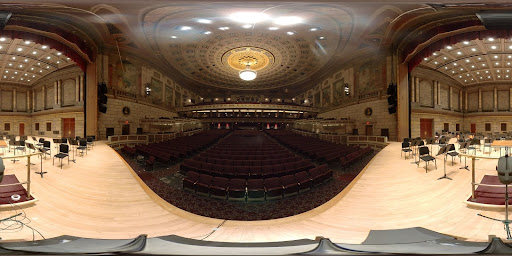

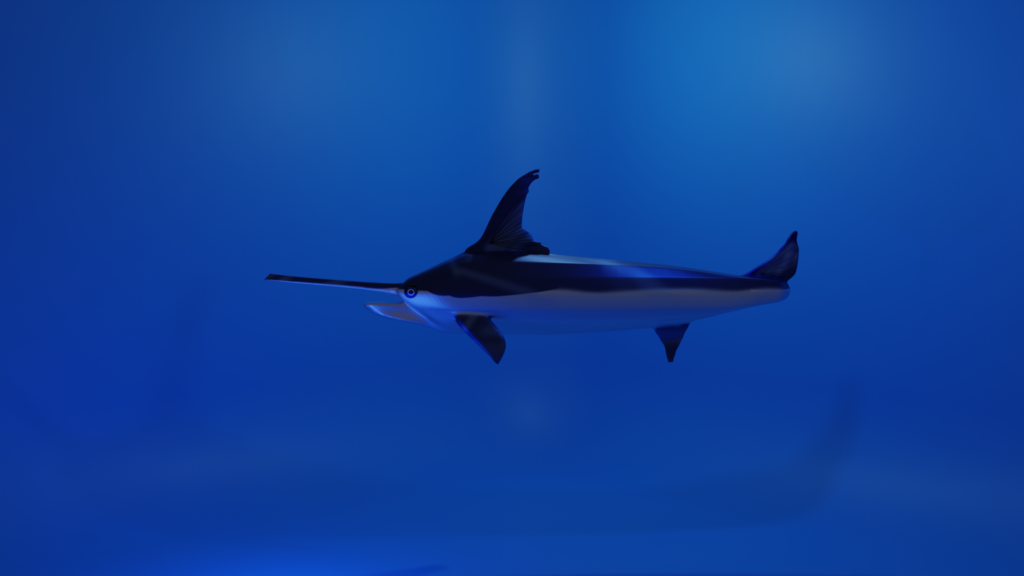

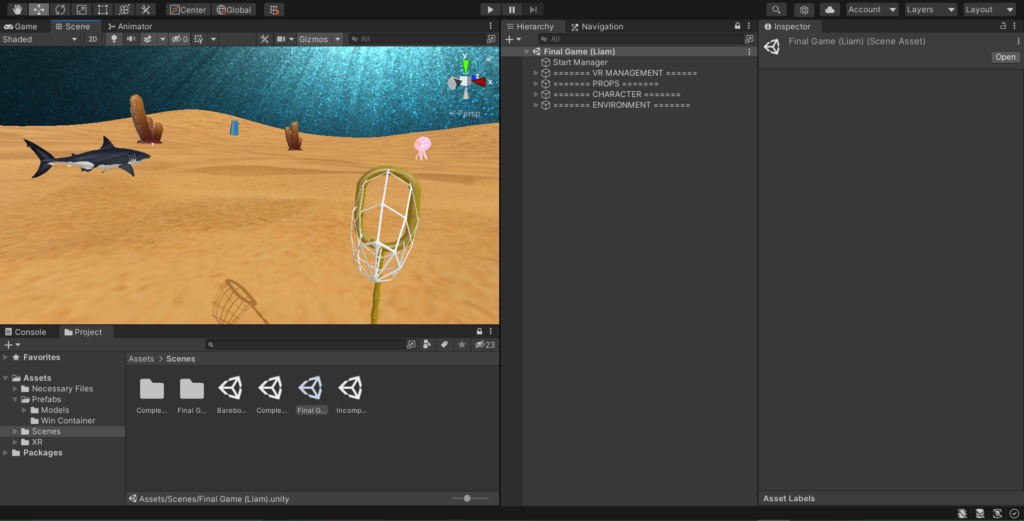

The event was an eye-opener, both for the participants and for us at Studio X. To ensure an immersive and interactive experience, I brought along Studio X’s VR headsets. I did not want to just talk about the potential of XR technology; I wanted to demonstrate it tangibly. Witnessing firsthand the curiosity and excitement of the attendees as they experienced the VR Roller Coaster ride was gratifying. The demo allowed the participants, who came from diverse backgrounds, to engage directly with the technology, enhancing their understanding and appreciation of its capabilities. The audience provided rich feedback and perspectives, highlighting the vast potential of XR in various sectors.

Participant Questions and Concerns:

- How do you deal with data privacy issues in VR? The headset has a lot of cameras, microphones, etc., and could potentially collect a lot of user data.

- How can we make VR more accessible in remote communities in Africa?

- The technology is not really accessible to Persons with Disabilities.

Participant Kudos:

- “It feels real. I actually felt like I was falling from the rollercoaster.”

- “I loved it. How can we collaborate with your organization to educate or expand access for girls in Kenya?”

- “I definitely see this being used more in the classroom. Students would love it.”

Conclusion:

The Networks Gathering in Kenya was not only a successful event but also a testament to the burgeoning interest in XR technologies across multiple sectors. Studio X remains dedicated to innovating and pushing the boundaries of XR, fostering global collaboration and continuous learning.